My thoughts on AI start with an article by a former Australian chief scientist. After throwing out a few examples of the potential uses of AI technologies (e.g. designing powerful chemical toxins, replacing actors with their likenesses without their consent, perpetrating undetectable scams, spreading misinformation during elections and building autonomous weapons), the author moved straight on to the subject of how to ‘regulate’ AI technology.

Why is this style of critique so popular? Possibly because humans have evolved to resist change. One hypothesis holds that, before the Industrial Revolution, it was statistically reasonable to assume that any change would have negative results; hence, opposition to change became a hard-wired heuristic.

However, since the Industrial Revolution, a small fraction of society has learnt to override its instincts and embrace change, benefiting personally by adapting to it quickly and exploiting it. A nice example in the context of AI is the hundreds, possibly thousands, of start-ups that are being funded by venture capital to exploit AI technologies for specific applications. A specific example in chemistry is worth noting: many start-ups are being funded to use AI in drug discovery. Nothing scary there – they are just using AI technologies to improve the odds of finding medically useful pharmaceuticals. Of course, if they are very successful, many jobs in the pharmaceutical R&D supply chain might be displaced, but one likes to think that the highly intelligent and skilled individuals in these jobs will be able to repurpose themselves fairly quickly.

The usual experts in predicting the future have forecast that the jobs most at risk from AI technologies are those where an employee spends most of their time in front of a computer monitor. Honestly, I think that might be a good thing. Some years back I did a ‘time and motion’ study of my own work life and discovered that an unhealthy amount of my time was being spent looking at a screen – so I changed my life. Of course, I am looking at a screen as I type this, the alternative being to handwrite a draft, or to use an actual typewriter. Or I could have jotted down some bullet points and asked ChatGPT to do the heavy lifting of creating all the filler words in between. I have tried that, and the results have not been impressive. In fact, the last time I wrote an article, I was asked by the editorial staff to reduce it from 1200 to 800 words; even for this task ChatGPT was completely useless – it disembowelled the meaning and readability of the essay while apparently also being unable to count words (I said 800 words, not 565).

On four counts I am not the least bit worried about AI technologies: progress in AI technologies will likely be a lot slower than people imagine, giving society ample time to adjust to each new breakthrough in capability; AI technologies will help humans break away from their work-enforced screen time (what they then do with their extra free time is on them); with a bit of luck, someone will apply AI technologies to a next-generation spelling and grammar checker for a word processor that is substantially better than that touted by Microsoft; and I like change.

Alan Turing famously posed the challenge for what was to become the AI-driven machine – the Turing test proposed that if a human evaluator can be fooled by a machine, then we have achieved a machine that can ‘think’ (which has been equated to the creation of AI). I don’t suppose Turing ever stopped to think about the distribution of human capabilities when it came to being fooled. In any case, I am sure we have all by now read articles that have been generated by ChatGPT and its ilk and are none the wiser as to whether they were human- or machine-generated. I don’t think that means that ChatGPT can ‘think’ or is an Orwellian threat to society. When AI software competes with humans for resources or demands a bank account, that’s when we should get worried. Until then, AI is there to make our lives easier and simpler by doing things for us that we prefer not to do, for one reason or another.

In fact, many researchers are already adopting AI for the writing of reports and papers. As many of you are aware, academic researchers are very much slaves to their h-indices – an obtusely simple approach to measuring the impact of academic research that is built entirely on counting citations to an academic’s papers by other academic researchers (an h-index of h means that h of those papers have each been cited at least h times). If an h-index has even a second-order correlation with research impact, I would be very surprised.
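For readers who have not met the calculation, here is a minimal Python sketch of the standard h-index definition: the largest h such that h papers have each been cited at least h times. The function name and the citation counts are hypothetical and purely illustrative.

```python
def h_index(citations):
    """Return the largest h such that h papers have at least h citations each."""
    # Rank papers from most cited to least cited.
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            h = rank   # the top `rank` papers all have at least `rank` citations
        else:
            break      # every later paper has fewer citations than its rank
    return h


# Hypothetical citation counts for one researcher's seven papers.
papers = [25, 8, 5, 3, 3, 1, 0]
print(h_index(papers))  # prints 3
```

Note that the paper with 25 citations moves the figure no more than one with three would, which gives some idea of how blunt the measure is.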

Michael J. Douma, a well-known historian and polymath, published research into academic papers and concluded that (bit.ly/3QbJZGn):

> … a scholar who writes articles in recognisable journals, even lower ranked ones, should expect that each article will be read about 100 times. If you are lucky and good, it might be read 1000 times. In the top, say 50 history journals, there are probably no articles that after 10 years have not been read at least a few dozen times.

In this context, and reintroducing AI into the discussion – if hardly anyone is reading your papers, then why not turn over the writing of them to AI?

Last year, after a 30-year hiatus, in my role as a visiting professor at the University of Technology Sydney, I published two papers in a peer-reviewed journal. The experience was completely underwhelming, and I have a new-found sympathy for academic researchers. The research outcomes were first published as précis on LinkedIn, where they generated a substantial amount of commentary and feedback. The full papers then had to endure the peer-review process and, after months of delays, were accepted for publication in the journal; we have not yet received a single item of feedback on them, other than from the peer reviewers. Given my experience with the low quality of the reviewing process, wouldn’t publishers be better off employing AI to simulate peer reviewers?

An area where I am certain AI is being used is in the generation of educational material by academics. I know of an academic who has decided to take the ‘working from home’ vibe to its extreme. He is now using AI software as the basis of his undergraduate lectures (which are delivered remotely of course). If an audience can’t tell the difference, then does it matter? All he has managed to achieve is a high level of work efficiency, where he has a lot more leisure time than his colleagues. Eventually everyone will catch up with him and he will be paid less for this effort, commensurate with the work effort required by the AI route.

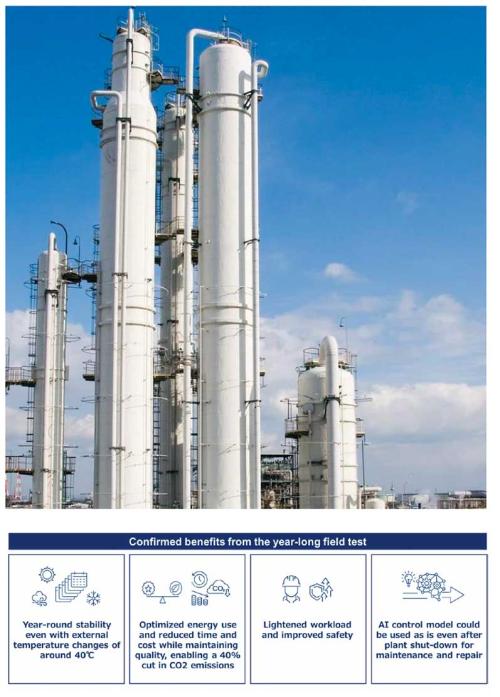

Returning to the use of AI in chemistry, I found a report that suggested the key areas of deployment will be molecule property prediction, molecule design, retrosynthesis, reaction outcome prediction, reaction conditions prediction, and chemical reaction optimisation.

If you ponder that list properly, it suggests that AI in chemistry will be used as a better means to solve complex model-based chemistry problems. That is, it will be used as an optimisation approach to replace current numerical approaches, rules of thumb and human insight.

I can’t resist the temptation to be a futurologist myself from time to time. To me, the impact of AI on chemistry is going to pale next to that of the use of quantum computing in molecular quantum chemistry. If and when we have fully functional quantum computers with millions or even billions of error-free qubits, it will be possible to solve Schrödinger’s equation for any molecular situation without having to make the current kind of assumptions that generally reduce the usefulness of molecular quantum chemistry to a very mathematically frustrating academic activity. Quantum chemistry has been around since 1927, when Walter Heitler and Fritz London published the first recognised paper in the area. To this day, I have not found one quantum chemist who can name a single practical application of molecular quantum chemistry outside of academia (if one excludes the application of quantum mechanics to solid-state non-molecular materials such as inorganic semiconductors).

Please note, this comment absolutely is not a criticism; it’s just to note that it’s a field that is waiting on the availability of new technologies so that it can have its day in the sun. Those new technologies will be quantum computing (for the processing of ab initio equations that define molecular properties, without the current value-destroying assumptions) and AI (to optimise said processing for a specific desired outcome).

On the topic of quantum computing, I am chair of a quantum computing start-up (Eigensystems) and this company has developed an educational product (the Quokka) so that people can learn to program and use quantum computers. (It is sort of analogous to the 1980s Tandy Color Computer in the digital computing era – if you are old enough to remember that.) Eigensystems uses sophisticated techniques to emulate a quantum computer – it’s a $500 product that emulates a quantum computer that would cost many millions of dollars, and it does so without any errors, unlike real quantum computers.

Quantum computing as a field of endeavour goes back to the 1980s and, to this day, although heavily hyped, is still effectively an investor-driven boondoggle. The largest functioning quantum computer in the world currently has 433 qubits but, to quote my expert, ‘it’s as noisy as [*#@!]’. Currently no resources in this device are employed to correct errors. The only way to use it is to run programs that are so small that, statistically, few if any errors actually occur.

Google has demonstrated an error-corrected qubit (that still doesn’t work properly) on a 53-qubit chip. It gave them one effective encoded qubit, but reduced error rates by only a fraction of a fraction of one per cent.

Generally, in quantum computing, the figure of merit is the ‘quantum volume’ – which effectively quantifies the size of the quantum program that can be successfully run. The largest quantum volume achieved to date by a real quantum computer is 524 288. For comparison, Eigensystems’ $500 quantum computer emulator has a quantum volume of approximately one billion. So, please don’t hold your breath, folks … practically speaking, I wouldn’t be surprised if quantum chemistry has to wait for another 100 years to have the tools in place to finally take off, such is the challenge faced by the hardware developers in quantum computing. I believe the relevant AI technologies will be available well ahead of that time.
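To put those two figures on the same scale: under the commonly used definition, a quantum volume of 2^n means that a machine can reliably run a random test circuit n qubits wide and n layers deep. Assuming both numbers quoted above follow that definition (the article does not say so explicitly), a short Python sketch converts them into an effective circuit size. The function name is illustrative only.

```python
import math


def effective_qubits(quantum_volume):
    """Convert a quantum volume of 2**n into n, the side of the largest
    'square' test circuit (n qubits wide, n layers deep).

    Assumes the 2**n definition of quantum volume applies to the input.
    """
    return math.log2(quantum_volume)


# Figures quoted in the text.
hardware_record = 524_288      # largest quantum volume on real hardware
emulator = 1_000_000_000       # approximate quantum volume of the emulator

print(effective_qubits(hardware_record))        # prints 19.0
print(round(effective_qubits(emulator), 1))     # prints 29.9
```

On that reading, the hardware record corresponds to a 19-qubit square circuit and the emulator to roughly 30 qubits.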

Generally speaking, I feel that as application-specific AI technologies become slowly available, society will adapt to them. It’s this slow pace of availability that has enabled society to already adapt to the millions of life-changing technologies that have been invented since the start of the Industrial Revolution. Despite the warnings of Aldous Huxley and social media, apocalyptic visions of billions of unemployed people enslaved to the desire of machines haven’t eventuated as yet. For chemistry and chemists, I think it’s all upside, and it’s worth noting that embracing change is probably a good way to not become a victim of it.